What was the main question in your dissertation?

How do people ensure that they understand each other during social interactions?

Can you explain the (theoretical) background a bit more?

I addressed my research question from two perspectives that differ from traditional studies in language research. First, I used an interactional perspective to study how people work together to ensure that they understand each other. Most language studies focus on how a single person understands language, such as processing words with multiple meanings or speech in noise. In my dissertation, I looked at how people understand language in conversation, where multiple people use and understand language. For example, I asked: what do people do when understanding breaks down?

Second, I used a multimodal perspective to study how people use multiple modalities to interact. In face-to-face conversations, we not only communicate using language; we also communicate using our whole bodies. For example, we can regulate the interaction using our posture, facial expressions, head movements, eye gaze and hand gestures. In my dissertation, I focused on speech and hand gestures, and particularly gestures that visually depict meaning (e.g. bringing your hand to your mouth as if holding a cup to express “drinking”). These gestures are called iconic gestures. Using these two perspectives, I studied how people coordinate their speech and their gestures to ensure that they understand each other during interaction.

Why is it important to answer this question?

We spend a great deal of our lives interacting with others, such as when we catch up with family or friends, or when we work together to cook a meal. During these interactions, it is crucial that the people involved understand each other so they can achieve their shared goal. Without this mutual understanding, they won’t get far and the interaction could break down.

Can you tell us about one particular project?

I focused on a key phenomenon that has been argued to play an important role in establishing mutual understanding: alignment. By alignment, I mean repeating the behavior of the person you are interacting with, such as when people repeat a word their conversational partner previously produced.

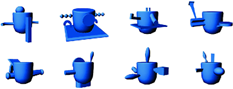

In my dissertation, I studied multimodal alignment: when people repeat each other’s words and gestures. Participants completed a task in which they took turns to describe and find 3D objects, called “Fribbles” (see the figure above). Participants had never seen these objects before, so there was no common name for them. This communicatively challenging task allowed me to study how people use alignment to ensure mutual understanding when describing unknown objects.

Participants aligned in both speech (reusing words their partner used) and gesture (reusing gestures their partner used), suggesting they aligned multimodally. This multimodal alignment occurred early on in the task. Interestingly, participants did not just “copy” their conversational partner. Instead, they would often alter the utterances and gestures they used to describe the Fribbles. These speech-gesture alterations helped them ensure that they were referring to the same Fribble as their partner.

What was your most interesting finding?

In my dissertation, I also studied how people use speech and gestures to jointly resolve “communication breakdowns”. To investigate this issue, I used the same dataset as described above (the “Fribbles” task) and looked at cases where a listener asked their partner for clarification, suggesting they did not hear or understand what their partner had said.

For the listener, the most efficient way to show trouble in communication would be to say something like “Huh?”, asking their partner to repeat or elaborate. In my research, however, I found that listeners tended to offer a solution to the trouble (e.g., saying “you mean like this ((gesture))?”). These solutions are likely more effortful than a simple “Huh?”, at least in terms of speech and gesture. These offers are typically confirmed without much effort by the partner (for example, they could reply by saying “yes” rather than having to produce a complicated reply). Thus, offering a solution to misunderstanding may require effort from the listener, but it requires the least amount of collaborative effort. I thus concluded that people tend to choose the most cost-efficient strategy to overcome difficulties in their conversation, at least in this task-based setting.

What are the implications of this finding?

In language research, we typically study language from an individual perspective, looking at how individuals produce and process language efficiently. But the findings on offering solutions show that people collaborate to resolve troubles in understanding. This collaboration follows principles of co-efficiency: rather than minimizing their own efforts, speakers try to minimize the combined effort needed to resolve communicative trouble, so they can go on with the conversation. I believe that to understand the dynamics of language use, we would benefit from more research that looks beyond the single mind.

What do you want to do next?

When I finished my thesis, I was sure of one thing: I wanted to continue studying interaction. Although I was open to the idea of studying interactions in a broad sense (not necessarily involving language), I had never thought about the possibility of studying non-human interactions. But then I saw a vacancy at the Meertens Institute for a postdoctoral project on human-cat interactions, and I was sold! (This vacancy was very popular; there was even a newspaper article about it.)

I got the job and started in April this year. It may seem like a big jump, but in this project I am still studying interactions from a multimodal perspective. My research focuses on how cats and humans recruit different body parts and vocalizations to communicate meaning in everyday activities such as feeding, petting or playing.

References

Barry, T. J., Griffith, J. W., De Rossi, S., & Hermans, D. (2014). Meet the Fribbles: Novel stimuli for use within behavioural research. Frontiers in Psychology, 5, 103. https://doi.org/10.3389/fpsyg.2014.00103

Dingemanse, M., Liesenfeld, A., Rasenberg, M., Albert, S., Ameka, F. K., Birhane, A., Bolis, D., Cassell, J., Clift, R., & Cuffari, E. (2023). Beyond Single-Mindedness: A Figure-Ground Reversal for the Cognitive Sciences. Cognitive Science, 47(1), e13230.