When someone says something to you, they are letting you know what is going on in their mind. It could be something simple, such as the fact that they like the food, or something more complicated, such as being unsure about what to study after the summer. What does it take to make sense of those sentences? And does ChatGPT really understand what you are saying?

The meaning of a sentence

To understand sentences, we have to do quite a bit. First, we have to recognise words in what we see (sign language or written language) or hear (spoken language). This is already a big job, but then it gets really complicated: we have to use the meaning of those words to build the meaning of the sentence. After all, a sentence means more than the individual words it contains.

The meaning of a sentence is always a combination of at least two things: the meaning of the words and how we combine those words. We know this because some sentences can have two meanings even though the words are exactly the same. Linguists call this ambiguity. An example of such a sentence is:

(1) Rose saw the man with the binoculars.

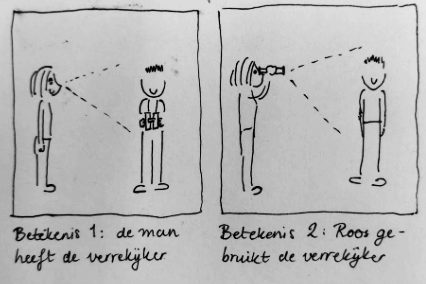

The two meanings come about when we combine the words in a different way. If we combine ‘the man’ with ‘with the binoculars’, Rose sees a man carrying a pair of binoculars (meaning 1). But we can also combine ‘with the binoculars’ with ‘Rose’: in that case, Rose uses binoculars to see the man (meaning 2).
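To make the idea of ‘combining the words in a different way’ concrete, here is a small sketch in Python. The nested brackets are an assumption made for illustration: they are one simple way to write down which words belong together, not a full linguistic analysis. For meaning 2, the sketch groups ‘with the binoculars’ with what Rose does (the seeing).

```python
# Two hypothetical groupings of sentence (1), written as nested tuples.
# Meaning 1: 'with the binoculars' is grouped with 'the man'.
parse_1 = ("Rose", ("saw", (("the", "man"), ("with", ("the", "binoculars")))))
# Meaning 2: 'with the binoculars' is grouped with Rose's seeing of the man.
parse_2 = ("Rose", (("saw", ("the", "man")), ("with", ("the", "binoculars"))))


def words(tree):
    """Flatten a nested grouping back into its words, left to right."""
    if isinstance(tree, str):
        return [tree]
    return [w for part in tree for w in words(part)]


# The word sequences are identical, but the groupings are not.
same_words = words(parse_1) == words(parse_2)
same_structure = parse_1 == parse_2

print(" ".join(words(parse_1)))
print("same words:", same_words)
print("same structure:", same_structure)
```

Both groupings flatten to exactly the same string of words, yet they are different objects. That difference in grouping is what separates the two meanings.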

How does ChatGPT work?

ChatGPT is a ‘Large Language Model’ (LLM). Large Language Models are trained on a large amount of text to predict what the next word in a sentence will be (read more here). At each step, ChatGPT uses the context to rank all words known to the model from most to least predictable. The most predictable word is added to the answer. If you do this often enough, you get beautiful sentences that make us suspect ChatGPT is sharing thoughts with us.
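The ‘rank the words, add the most predictable one, repeat’ loop can be sketched with a toy example. This is a heavily simplified stand-in, not how ChatGPT is actually built: it only counts which word follows which in a tiny made-up text and looks at just the previous word, whereas a real LLM uses a neural network and a much longer context. The text in `corpus` and the function names `rank_next` and `generate` are invented for this illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up training text (an assumption, for illustration only).
corpus = "rose saw the man . the man saw the bird . the man saw rose ."

# 'Training': count how often each word follows each other word.
follow_counts = defaultdict(Counter)
tokens = corpus.split()
for current, nxt in zip(tokens, tokens[1:]):
    follow_counts[current][nxt] += 1


def rank_next(word):
    """Rank candidate next words from most to least frequent after `word`."""
    return [w for w, _ in follow_counts[word].most_common()]


def generate(start, length):
    """Repeatedly append the top-ranked next word to the output."""
    out = [start]
    for _ in range(length):
        candidates = rank_next(out[-1])
        if not candidates:
            break  # the toy model has never seen anything follow this word
        out.append(candidates[0])
    return " ".join(out)


print(rank_next("the"))
print(generate("the", 4))
```

The toy model produces a plausible-looking string of words purely from counts. Nothing in it represents who saw whom.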

So ChatGPT does have a certain knowledge of language, and some researchers think that the way ChatGPT learns and uses language may well be similar to how we humans do – although far from everyone agrees (read more here). Even if a comparison could be made, there remain very big differences between the way ChatGPT processes language and the way humans do. One important distinction is that ‘predicting the next word’ is not the same as ‘understanding what is being said’. Imagine you are in a country where you do not understand the language, and every day you pass a banner with words on it. If you see that banner often enough, you can learn the order of the words, but you don’t know what the inscription means.

This is exactly what ChatGPT does. All major language models use knowledge about which words are often used together to create sentences that are likely to be correct. So ChatGPT is very good at memorising word combinations, but has no knowledge of the building blocks of meaning: the abstract structure and meaning of the words.

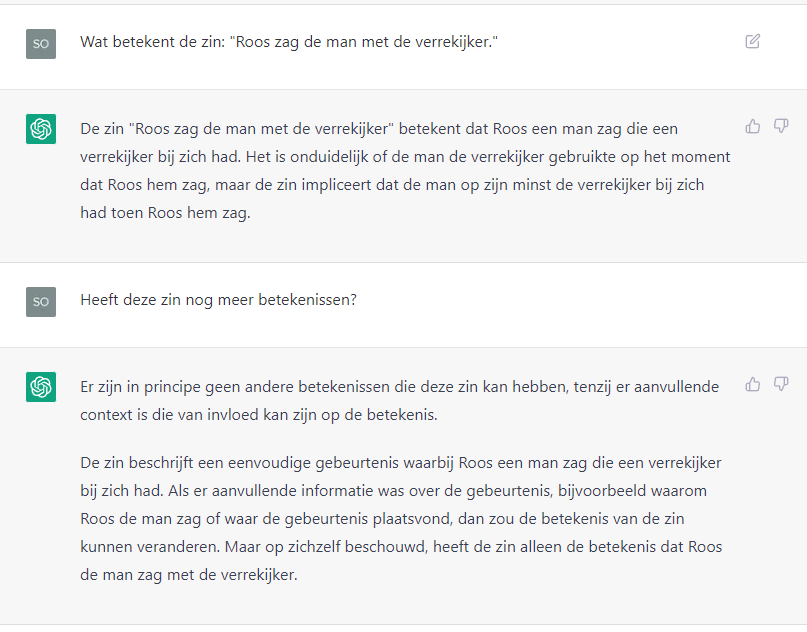

So what would happen if we ask ChatGPT what the sentence in (1) means? Indeed: we get an answer consisting of correct sentences, but ChatGPT only gives us the most likely interpretation of the sentence, and not both (see the image above and its translation below).

Translation:

– What does the sentence ‘Rose saw the man with the binoculars’ mean?

– The sentence ‘Rose saw the man with the binoculars’ means that Rose saw a man who had binoculars. It is unclear if the man was using the binoculars at the time Rose saw him, but the sentence implies the man at least had them with him when Rose saw him.

– Does this sentence have more meanings?

– There are no other meanings for this sentence unless there is additional context that is important for the meaning. The sentence shows a simple event where Rose saw a man who was carrying binoculars. If there was additional information about this event, the meaning of the sentence could change. But on its own the sentence has one meaning: that Rose saw the man with the binoculars.

So ChatGPT does not understand what you are saying, although it may seem to. Yet the bot will always say “yes” when we ask if it understands us: ChatGPT has learned that this is the most likely answer, but will never be able to understand why.