What was the main question in your dissertation?

Our ability to use language to communicate with each other is at the heart of what makes us human. However, languages around the world differ in their sounds, words, and grammar. Consequently, languages sometimes differ considerably in the meanings they express. Previous research shows that, because of this diversity, speakers of different languages see the world with different eyes. Colour perception is a good example: people recognise a difference between two colours more efficiently when their language has a distinct word for each.

In my dissertation, I wanted to investigate whether this also holds when comparing users of spoken languages with users of sign languages. Instead of looking at differences in colour words, I looked at a fundamental difference between spoken and sign languages, namely modality: signing uses not the invisible vocal cords but visible parts of the body, through movements of the hands, face and body. I studied whether this difference in modality (vocal versus visual) leads hearing speakers and deaf signers to look at the world differently. I also explored what happens when one person knows both a sign language and a spoken language. Do they talk and sign differently because the two languages influence each other? And how does this affect the way they look at the world?

Can you explain the (theoretical) background a bit more?

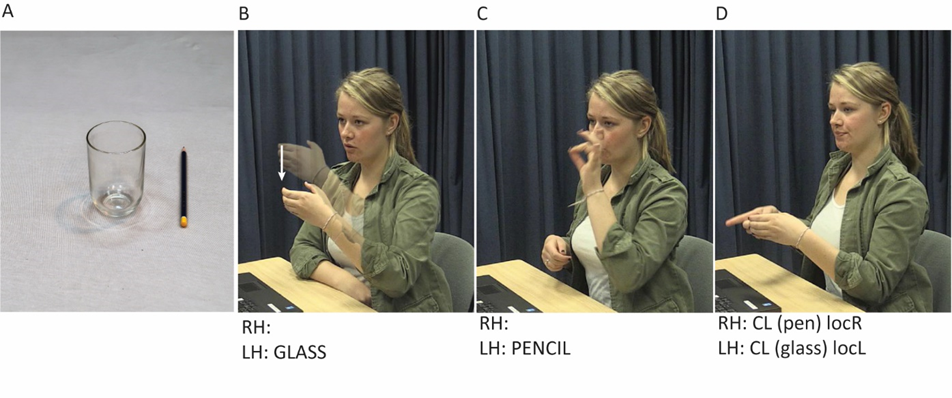

I chose to study how people communicate about spatial relations because we know that spoken and sign languages differ immensely in this specific domain. In particular, I studied descriptions of pictures that show two objects, such as a pen and a glass, in a particular spatial relation to each other. For instance, the pen was to the right of the glass.

There are two main differences in the way speakers and signers can describe these pictures: (1) Using their hands and other visible parts of the body, signers can depict the objects and their spatial relation by mirroring them with the hands and placing the hands in front of the body. This visual mirroring of spatial relations, which I call “iconicity” in my thesis, is not possible in speech; (2) Signers of many sign languages mention the two objects (e.g., pen and glass) in a consistent order: bigger objects such as the glass first and smaller objects such as the pen second. As spoken languages do not use such a consistent order to describe these relations, this ordering seems to be driven by the use of the hands to describe spatial relations.

With these two differences in mind, I designed an experiment that combined a picture description task with eye tracking. This way, I investigated possible relations between how hearing speakers, deaf signers and hearing bilinguals of a sign and a spoken language describe pictures of spatial relations and how they look at these pictures.

Why is it important to answer this question?

Given the enormous diversity of languages used around the world and the fact that about 60% of us speak more than one language, it seems impossible that we all think about and perceive the world in the same way. By studying possible interactions between language and cognitive processes, we learn how people from different cultures and languages might differ in the way they think. Unfortunately, sign languages are still understudied compared to spoken languages, and the type of experiment discussed in my thesis has rarely been conducted with signers before. I think it is really important to include sign languages in this line of work as well. After all, sign languages are languages too.

Can you tell us about one particular project? (question, method, outcome)

My dissertation chapters are all based on one big project. Each chapter focuses on different aspects of the same experiment with the same participants. Hearing speakers of Dutch, deaf signers of Sign Language of the Netherlands (NGT), and hearing bilinguals of Dutch and NGT were seated in front of a laptop and, on every trial, saw four pictures on the screen. Each picture showed the same two objects (e.g., a glass and a pen) but in four different spatial relations (e.g., left/right/front/on). The participants’ task was to describe one of the pictures, indicated by an arrow, to a trained interlocutor. The interlocutor’s hearing status matched that of the participant: hearing speakers described pictures to a hearing, speaking addressee, and deaf signers were paired with a deaf, signing addressee. Using a small infrared camera in front of the participants, I tracked where on the screen they looked. I wanted to see which picture participants focused on and how often they looked at it, and to compare these data between hearing speakers and deaf signers, given the different modalities in which they describe the pictures in their own language.

What was your most interesting/ important finding?

In sign languages, descriptions often visually mirror the spatial scene, whereas spoken descriptions often contain non-iconic words like “left”. Does this difference cause signers to look at these spatial relations differently than speakers? This was the main question in Chapter 2 of my dissertation. I found that hearing speakers mostly look at the picture they have to describe (the one with the arrow) while they are planning to speak. By contrast, deaf signers increasingly looked at all four pictures when they were planning to sign, even though they only had to describe one. I argued that this is because signing about spatial relations might require extra attention to work out how to shape the hands and where to place them in front of the body.

What are the consequences/ implications of this finding? How does this push science or society forward?

The type of work presented in my thesis can have a profound impact on the debate over using sign language with deaf and hard-of-hearing children who have received cochlear implants. These are high-tech medical devices that help deaf people perceive sound. Medical professionals often advise against the use of sign language after implantation, arguing that sign language would hinder the development of speech. My findings on bilinguals’ language use provide evidence that signing does not harm speech development. Influences across languages occur naturally in bilinguals and are not an indication that bilinguals are confused. On the contrary, these influences show that bilinguals are sensitive to grammatical and sociolinguistic constraints in both languages.

Based on my findings, I suggest that acquiring both a sign language and a spoken language from early on (in bilingual education) might enrich speech and communication. One of the goals of my thesis was to raise awareness of language practices in the deaf community. Bimodal bilingual language development (e.g., NGT and spoken Dutch) should be accepted as much as bilingual development of two spoken languages (e.g., Dutch and English).

What do you want to do next?

I would like to apply what I learned during my PhD, in terms of both content and skills, to help other researchers make their research lives easier. Currently, I work as a data steward in the Research Services group at the Institute for Management Research at Radboud University. Here, I support the ethics committee, help develop the ICT data infrastructure and coordinate research data management.

Interviewer: Julia Egger

Editor: Caitlin Decuyper, Sophie Slaats

Dutch translation: Veerle Wilms

German translation: Bianca Thomsen